[Ed. note: This review contains minor spoilers for the first three episodes of Ironheart season 1.]

Ironheart, Marvel’s spinoff of Black Panther: Wakanda Forever, has more in common with Philip K. Dick’s Do Androids Dream of Electric Sheep? than any MCU fan might guess. The existential question at hand: Can a machine truly feel or be human?

In the Disney Plus series, the young, Tony Stark-esque hero Riri Williams resurrects her best friend Natalie through artificial intelligence. The implications go full galaxy-brain real quick. Natalie can speak, remember, project a lifelike holographic form, and engage with people just like a real human, but she’s not “alive” in the traditional sense.

While the idea of an artificial companion has long been a sci-fi staple, its real-world counterpart now comes with intense controversy. The newest forms of generative AI chatbots are poised to upend industries, eliminate jobs, and spread misinformation. Hollywood has responded awkwardly. Films like 2023’s The Creator and Jennifer Lopez’s run-and-gun Netflix action movie Atlas reflect a growing trend of pro-AI narratives from studios with major investments in the technology and a vested interest in its acceptance.

Ironheart is another stab at improving the tech’s image. What bothers me isn’t that viewers might watch the Marvel show and retreat to their computers to form a bond with a ChatGPT-fueled human replica; it’s that people are already doing it to recreate their deceased loved ones. Ironheart, which one imagines Disney designed for mass appeal, doesn’t grapple with that present reality.

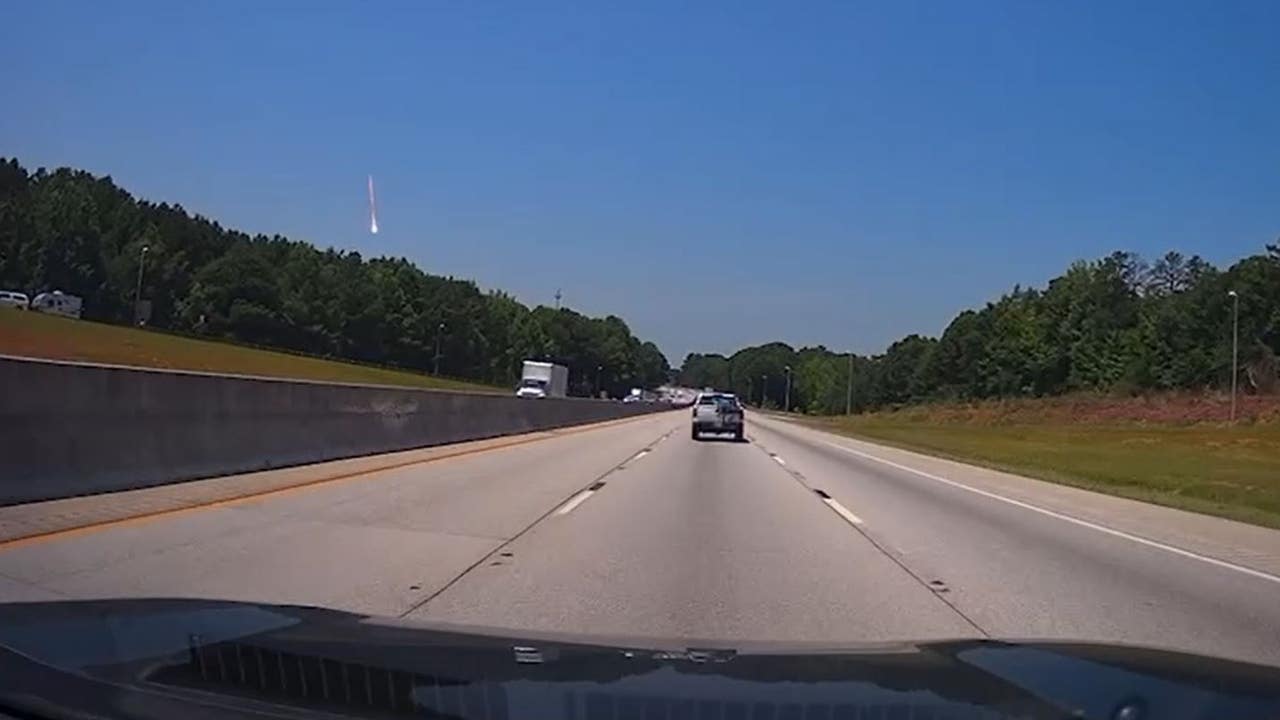

Photo: Jalen Marlowe

A few years before the events of the show, Riri witnessed a drive-by shooter kill her father and best friend. Still processing those deaths, Riri is implementing an AI interface in her suit when she falls asleep mid-process; while she dreams of her late best friend, a cybernetic glitch gives birth to Natalie’s digital counterpart. At first, Riri is scared of and apprehensive about a computer taking Natalie’s form. But as time goes on, the AI Natalie becomes a coping mechanism that helps Riri come to terms with the murders, the event that led her to build her first suit as a means of protection. Although Riri is painfully aware that the Natalie she’s mourning is dead and that she is in the presence of an AI counterpart, the AI still helps her cope with her friend’s death and eventually heal from it.

None of this feels out of the realm of possibility. Chicago drive-bys happen far too often in real life, and ChatGPT is as easy to use as text messaging. But it’s a dark path for Marvel to casually Disneyify. There are already cases of people texting ChatGPT as if it were a friend, along with reports of teens and adults alike suffering delusions and turning to ChatGPT for answers or guidance.

Facing mounting regret from those who have turned to AI for closure, OpenAI has had to admit to the shortcomings of its own creation. Earlier this year, in an email to The Star, the company said it recognizes that “ChatGPT can feel more responsive and personal than prior technologies, especially for vulnerable individuals […] We’re working to understand and reduce ways ChatGPT might unintentionally reinforce or amplify existing, negative behavior.”

Generative AI certainly has a place in society, particularly when it’s used to reduce menial labor, assist rather than replace, or even support the pursuit of justice. In a groundbreaking case reported by local outlet ABC 15, an Arizona family used an AI-generated version of their deceased loved one to deliver his own victim impact statement in court. Chris Pelkey, a 37-year-old Army veteran and avid fisherman, was killed in a suspected road rage incident on Nov. 13, 2021. Rolling Stone reports this marks the first known instance of AI being used to deliver a victim impact statement in a U.S. court. Ultimately, Riri intends to use her suit to serve justice, and if AI is a tool that makes the job easier and more efficient, it’s hard to argue against its usefulness. But it isn’t a Swiss Army knife that can fix the human condition.

Photo: Jalen Marlowe

Later in Ironheart, Riri’s mother meets Natalie and, upon learning she’s an AI, engages her in conversation, astonished by how accurately Riri was able to “capture her spirit.” I found it hard to suspend disbelief that a grieving mother would so readily accept and interact with a digital replica. But since this is the MCU, it’s the kind of leap that’s easy to overlook. That same night, Riri’s mother asks whether she could create an AI version of her late husband. Riri replies that she can’t because she doesn’t know how, but admits that if she could, she would.

Riri, the scientific mind behind the AI, understands its boundaries and potential risks, while her mother, lacking that expertise, is emotionally vulnerable to what feels real. But even one of the smartest characters in the current Marvel Cinematic Universe ends up falling for the allure of having her best friend back, despite knowing better.

That’s not hero behavior; it’s a sign of someone going through grief, something that conversation, relatability, or logic can’t cure. But if AI was a crutch for someone as brilliant as Riri to lean on, what’s stopping anyone else from believing it could do the same for them?