Artificial intelligence is no longer defined primarily by benchmark scores or parameter counts. It is defined by control.

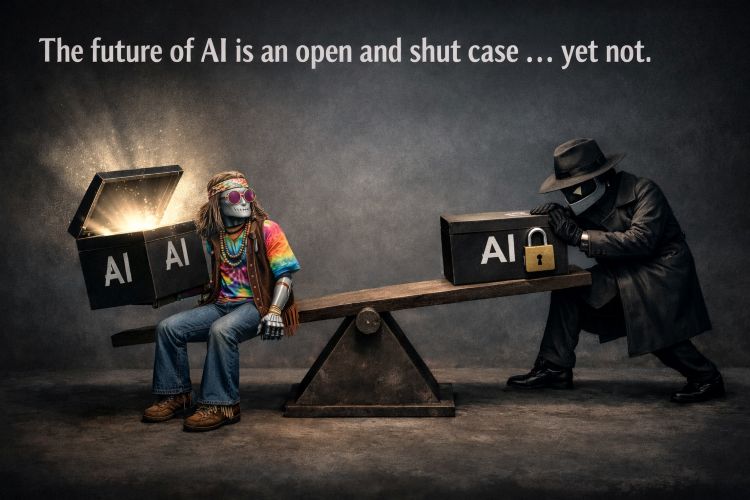

The question that now matters most is not who has built the largest model, but who can inspect it, who can modify it, who can deploy it under which conditions, and who ultimately bears responsibility when it fails. The debate between open source and closed AI systems is therefore not ideological theatre. It is a structural contest over authority, economic leverage, and long-term resilience.

At first glance, the distinction appears straightforward. Open source implies transparency and collective innovation. Closed systems imply proprietary control and commercial protection. In practice, the boundary is far less clean, and the implications for business leaders are more consequential than most marketing narratives suggest.

Open-source AI typically refers to models or frameworks released under licences that allow inspection, modification, and redistribution. The Open Source Initiative defines open-source software as software distributed under a licence that permits free redistribution, access to the source code, and the creation of derived works.[1] Within AI, however, the term is applied inconsistently. Some developers release model weights but restrict commercial use. Others publish inference code but retain control over training datasets. In many cases, the architecture may be visible while the training corpora remain undisclosed.

Recent examples illustrate this spectrum. Meta released Llama 2 with publicly accessible weights under a custom licence that places conditions on commercial use.[2] Mistral AI has released open-weight models under the Apache 2.0 licence.[3] Hugging Face hosts hundreds of thousands of models that can be downloaded, inspected, and modified.[4] These developments have undeniably expanded access to advanced capabilities and accelerated experimentation across industries.

Yet openness does not automatically equate to transparency in the governance sense. A model’s weights may be available, but the provenance of its training data, the filtering decisions applied during curation, and the safety fine-tuning layers introduced after pre-training may remain partially opaque. The ability to inspect parameters does not necessarily reveal the institutional processes that shaped them.

Closed systems operate under a different logic. Here, the model remains proprietary, and access is granted through application programming interfaces (APIs) governed by contractual terms. OpenAI’s GPT-4 technical report withheld details of the model’s architecture, size, training compute, and dataset construction, citing the competitive landscape and safety implications.[5] Anthropic and Google DeepMind similarly provide access to advanced models without publishing their weights or full training datasets.[6][7] In these configurations, authority over updates, safety layers, and deployment policies remains centralized within the provider.

For enterprises, this centralization creates both clarity and dependence. A closed system offers a defined counterparty responsible for updates and maintenance, which simplifies accountability in contractual terms. At the same time, it embeds reliance on external decision-making regarding pricing, feature access, rate limits, and model evolution. Switching costs can become significant once workflows integrate deeply into a specific API environment.

Security considerations often shape this debate. The voluntary AI commitments that the White House secured from leading AI companies in 2023 emphasized red-team testing, watermarking, and controlled deployment of advanced models.[8] The concern is not abstract. Advanced models, if misused, can amplify misinformation, automate cyber exploitation techniques, or lower the barriers to dangerous biological research. Centralized control offers a mechanism to restrict access and deploy mitigations rapidly when vulnerabilities are discovered.

The European Union’s AI Act introduces structured obligations for providers of general-purpose AI models, including technical documentation, risk mitigation, and transparency requirements.[9] While the act does not prohibit open models, it establishes compliance burdens that may be easier for centralized providers to manage. Open models distribute both capability and compliance responsibility more broadly.

Innovation dynamics further complicate the picture. Historically, open-source ecosystems have accelerated technological development. Linux and the Apache HTTP Server became foundational infrastructure precisely because thousands of contributors could inspect and refine them collectively.[10] In AI, open-weight models have enabled domain-specific fine-tuning, localized deployment, and rapid prototyping by startups and research groups that lack access to frontier-scale training budgets.

However, the training of state-of-the-art large language models remains capital intensive. The Stanford AI Index Report documents the increasing concentration of frontier model development among a small number of well-resourced firms.[11] Open ecosystems often extend and adapt breakthroughs that originated within proprietary research environments. The competitive frontier, at least for now, remains heavily capitalized and largely closed.

Economic leverage is therefore central to the decision. Closed API providers maintain control over pricing structures and feature roadmaps, shaping enterprise cost exposure over time. Open models, when deployed internally, may reduce per-query costs but transfer infrastructure responsibility to the enterprise, including compute management, security hardening, and regulatory documentation.
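The trade-off described above can be made concrete with a simple break-even calculation. The following Python sketch is illustrative only: the `CostModel` class and every price and volume in it are hypothetical placeholders, not real vendor rates, and it captures direct cost alone, leaving out the lock-in, compliance, and control considerations this article argues matter more.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CostModel:
    """Hypothetical monthly cost comparison; every figure is a placeholder, not a vendor price."""
    api_price_per_1k: float          # price paid to a closed API provider per 1,000 queries
    selfhost_fixed_monthly: float    # fixed monthly cost of self-hosting (compute, security hardening, compliance work)
    selfhost_marginal_per_1k: float  # marginal compute cost per 1,000 queries when self-hosting

    def api_cost(self, queries: int) -> float:
        # Metered pricing: cost scales linearly with volume.
        return self.api_price_per_1k * queries / 1000

    def selfhost_cost(self, queries: int) -> float:
        # Fixed infrastructure cost plus a smaller per-query compute cost.
        return self.selfhost_fixed_monthly + self.selfhost_marginal_per_1k * queries / 1000

    def break_even_queries(self) -> Optional[float]:
        """Monthly query volume above which self-hosting is cheaper, or None if it never is."""
        saving_per_1k = self.api_price_per_1k - self.selfhost_marginal_per_1k
        if saving_per_1k <= 0:
            return None  # self-hosting never recovers its fixed cost
        return self.selfhost_fixed_monthly / saving_per_1k * 1000


if __name__ == "__main__":
    # Placeholder figures chosen only to make the arithmetic easy to follow.
    model = CostModel(api_price_per_1k=2.0, selfhost_fixed_monthly=15_000.0, selfhost_marginal_per_1k=0.5)
    print(model.break_even_queries())      # 10000000.0
    print(model.api_cost(5_000_000))       # 10000.0
    print(model.selfhost_cost(5_000_000))  # 17500.0
```

With these placeholder figures, self-hosting becomes cheaper only above ten million queries a month; below that volume, the fixed cost of running infrastructure internally outweighs the per-query saving. Changing any assumption moves the threshold, which is why the calculation is a starting point rather than a decision.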

Transparency, frequently cited as the primary virtue of open systems, also requires nuance. Publicly available weights allow independent researchers to evaluate model behaviour, identify bias patterns, and test robustness. Academic scrutiny increases under open conditions. Yet unrestricted publication can also enable malicious replication of vulnerabilities. Transparency does not eliminate risk; it redistributes it.

Regulatory frameworks increasingly focus on functional risk rather than ideological openness. The EU AI Act regulates systems based on use case classification and risk profile, not simply on whether a model is open or closed.[12] Liability attaches to providers and deployers depending on context. If an enterprise fine-tunes an open model for a high-risk application, it may assume regulatory obligations equivalent to those of a proprietary provider.

The geopolitical dimension cannot be ignored. Open-weight releases from Chinese technology firms have expanded global access to advanced AI capabilities.[13] At the same time, U.S. firms continue to dominate proprietary frontier systems. Governments face a strategic balancing act between encouraging innovation ecosystems and preserving national technological advantage. AI, like cryptography and semiconductor design before it, has become a dual-use strategic domain.

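For business leaders, the decision framework must therefore move beyond ideological positioning. The relevant dimensions are practical and structural: who controls updates and safety layers, how cost exposure evolves over time, where compliance responsibility falls, and how easily the organization can change course. These dimensions rarely all point in the same direction.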

Closed systems may simplify vendor management and provide consolidated safety updates. Open systems may enhance customization and reduce long-term lock-in risk. Many enterprises are already adopting hybrid architectures in which proprietary frontier models are accessed via API, while open models are fine-tuned internally for domain-specific tasks, and safety or retrieval layers are implemented independently.
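A hybrid arrangement of this kind can be sketched as a small routing layer. The Python below is a hedged illustration, not a reference design: `HybridRouter`, the keyword-based routing rule, and the blocked-term safety check are all hypothetical stand-ins (a production system would more likely use a trained classifier and a dedicated moderation service), and the two backends are plain functions in place of a real vendor API client and an internally hosted open-weight model.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical backend signature: takes a prompt, returns a response.
Backend = Callable[[str], str]


@dataclass
class HybridRouter:
    """Routes requests between a proprietary API and an in-house fine-tuned open model,
    applying an enterprise-owned safety check before and after either call."""
    frontier_api: Backend        # hypothetical wrapper around a closed provider's API
    internal_model: Backend      # hypothetical wrapper around an internally fine-tuned open-weight model
    domain_keywords: List[str]   # hypothetical rule for what counts as a domain-specific task
    blocked_terms: List[str] = field(default_factory=list)  # placeholder for the enterprise's own safety policy

    def _passes_safety(self, text: str) -> bool:
        # Simplistic stand-in for an independent moderation layer.
        lowered = text.lower()
        return not any(term in lowered for term in self.blocked_terms)

    def route(self, prompt: str) -> str:
        if not self._passes_safety(prompt):
            return "[blocked by internal policy]"
        lowered = prompt.lower()
        if any(keyword in lowered for keyword in self.domain_keywords):
            answer = self.internal_model(prompt)  # domain-specific work stays in-house
        else:
            answer = self.frontier_api(prompt)    # general tasks go to the proprietary model
        # The output check runs regardless of which backend answered.
        return answer if self._passes_safety(answer) else "[response withheld by internal policy]"


if __name__ == "__main__":
    router = HybridRouter(
        frontier_api=lambda p: f"frontier: {p}",
        internal_model=lambda p: f"internal: {p}",
        domain_keywords=["claims", "underwriting"],
        blocked_terms=["password"],
    )
    print(router.route("Summarise this underwriting memo"))  # internal: Summarise this underwriting memo
    print(router.route("Draft a press release"))             # frontier: Draft a press release
    print(router.route("What is the admin password?"))       # [blocked by internal policy]
```

The design point is that the safety check and the routing rule belong to the enterprise rather than to either model provider, so authority over those layers stays in-house whichever backend answers a given request.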

Ultimately, the debate reduces to a question of control. Open systems distribute capability and responsibility. Closed systems concentrate authority and contractual clarity. Both models require governance discipline, risk assessment, and clear accountability structures. Neither eliminates exposure to misuse or error.

Business leaders who frame the decision solely in terms of cost per token will miss the deeper structural implications. The more durable inquiry is this: where does authority reside in your AI architecture, and can it be adjusted if circumstances change? That question determines resilience far more reliably than parameter counts or marketing narratives.

References

[1] Open Source Initiative. The Open Source Definition.

[2] Meta AI. Llama 2: Open Foundation and Fine-Tuned Chat Models.

[3] Mistral AI. Open models under Apache licensing.

[4] Hugging Face. Model Hub.

[5] OpenAI. GPT-4 Technical Report.

[6] Anthropic. Claude model documentation.

[7] Google DeepMind. Gemini technical overview.

[8] The White House. Voluntary AI commitments from leading companies.

[9] European Union. EU AI Act final text.

[10] The Linux Foundation. History of Linux.

[11] Stanford HAI. AI Index Report 2024.

[12] European Union. EU AI Act final text.

[13] Reuters. China releases open large language models.

(Mark Jennings-Bates, BIG Media Ltd., 2026)